It’s time to design for trust

(upbeat rhythmic music) - Morning everyone, thank you very much for having me. I was planning today to start with a story and I ran it by my wife and she advised against it so I'm gonna do something slightly different. I unfortunately missed both Sarahs' presentations. I promise I don't have anything against Sarahs, it just happened to go down that way, but what I have done whilst observing in the audience is just take a few notes.

The reason I did that is early on yesterday I started noticing some fairly consistent themes. Now, I can't promise you but I will do my best to try and tie those themes into my narrative today. So, really quickly when we think about what Stephanie spoke about, the thing that stuck out to me was that the attention economy, the kind of status quo of the digital economy today was designed and I think that was a beautiful insight. Sometimes we forget that and it happened quite a long time ago, so thank you Stephanie wherever you are.

Stephan, I love people that say the F word on stage so, mate, I don't know where you are, but you're cool in my books.

The fact that the internet is dying, I fundamentally believe in that.

Centralised power, surveillance capitalism as the attention economy is sometimes known, decreasing agency, or at least the view that agency is decreasing.

Now, when it comes to distributed ledger tech, there are many uncertainties and I think highlighted limitations but in terms of the broad underlying protocol, it's the type of thing that we as designers probably should be paying attention to.

It may well impact some of the stuff that we do going forward.

Eduardo is right here in front of me so I can, mate, I didn't know that you're a famous actor. Can I get your autograph afterwards? And look, that was really brilliant.

The thing that I loved most was just, like, reframing eye tracking, and I'm interested to see what Jared Spool has to say about that.

Has there been any Twitter banter yet? I haven't been on Twitter? John, no? Mate, send him a Tweet.

Dave, we had a chat yesterday, amazing jobs to be done sort of intro.

If you're familiar with Jobs Theory and I did actually, I questioned Dave on this, sorry I can't really see anyone properly so if I'm looking at you and you're not Dave and you think I'm weird, just, I'm cool with that. There are a lot of competing ideas in the jobs to be done practitioner field and there are two guys specifically that are like going at each other at the moment. Both have valid points, both have fantastic proof points, I think when it comes to jobs to be done try out a bunch of different stuff and this is what Dave was saying, he's kind of come up with his own toolkit based on a bunch of other stuff that other practitioners do.

Love that approach so thank you.

Facebook guys, we had, and Holly, we had a good laugh last night and although it wasn't explicit and it wasn't something that you mentioned, this was my key take-away from your presentation, totally biassed but you know, it was actually about trust, I think trust in the process, trust in the strategy, and trust in the value of working together to solve problems more effectively.

So, thanks Ben and Jamie.

Dianna, I love the idea of diving into the evolving role of the designer. Opportunity cost is something that I as a business owner have to think about all the time but sometimes when we're engaged in the practice and the process of design, we do forget that, right? And I love the fact that you brought that up. Focus really matters.

And this thing, remember the designing code is a false dichotomy so thank you so much for bringing that up.

Rem, mindfulness, oh, man.

Mindfulness has actually played a huge role in my life, like it really has.

But I never like consciously thought about it in the context of my practice and so that reframed my thinking a little bit and I was like shit, and I caught myself on the phone a few times but I was writing notes, Rem, so wherever you are, I had a purpose.

And you know, I'm gonna be a dad soon, which is really exciting, and mindful listening, like I, you know, speaking of me being on my phone whilst Rem was speaking, but when I thought about that in the context of being a parent I was like, wow, that's really powerful.

So, personally that was probably the biggest take-away. The fact that you brought up Centre for Humane Tech and that was brought up a couple of times yesterday. Hillary and I were speaking last night, we spoke about something called the Tactical Technology Collective, it's tacticaltech.org.

They're based in the UK, I suggest checking them out if you're interested in the space.

They do some really interesting stuff.

Coryann, I loved how you challenged us to reframe how you think about the future.

It was just a brilliant presentation 'cause it just was unexpected.

I just really liked it and although I like poker, I'm never playing with you. It's just not a good idea.

I'll lose my money.

Dallah, a great way to end the day.

I think conversation design as a broad discipline, broad area of focus is super interesting.

Really loved the pyramid, broken down front end versus back end, that was quite impactful.

I thought that kind of synthesised a fairly complex set of capabilities, team compositions, however you wanna look at it in a really nice way.

I actually first worked in conversation design in financial services a few years ago and even like, just when you're getting started, the conditional logic and stuff like that, it's tough, like it really is hard and I appreciated that you exposed the difficulty that you guys are going through at one of the biggest companies in the world but I am, I'm really excited about conversational design and I love this, the magic is in the voice. I wish you'd have finished with that, actually. Like, the magic is in the voice and then just walked off.

That would have been really cool.

Hillary and I, we actually conspired together to make up our presentations so we didn't really, but when I think about what Hillary's talking about and IEEE's work, what I'm actually gonna be chatting to John Havens, the chair of the IEEE's ethics board next week, it is probably one of the biggest challenges and opportunities that we face.

And I agree with Hillary fundamentally that we are in a unique position of power.

I'll talk a bit more about that soon.

So, to bring it all together, key themes, diversity and inclusion, that's the big one.

I'm not gonna say too much more about that. I'll hopefully touch on it throughout the presentation. Designing for people not users.

I fucking hate the word users.

Again, I'm not gonna go into it, but I think we have to focus on designing for people. A lot of people used the words human beings yesterday. I don't know, that still sounds a little bit like Yuval Noah Harari, or whatever, the guy who writes Sapiens. It seems a bit like that to me, I just like the concept of designing for people and again that's me synthesising, this is not gospel up here but I really took that away.

I think people are starting to focus on designing for people and not users.

And lastly, ethical and trustworthy design. Right up my alley, so we will kick off as soon as I relog in and I'm gonna start by talking a little bit about what's going on in the world.

I'm gonna skip the whole introduction to me. I don't think it's gonna add a huge amount of value given that we've only got 20 minutes.

So what is going on in the world, I'm gonna start with data breaches.

Everyone's spoken about Facebook and Cambridge Analytica, guys, I'm not gonna stand up here and have a crack at you. It's certainly not your fault.

The issue that we're facing is much more systemic than any one company anyway and it was a data policy breach, not a data breach in any case.

The one that I like to talk about the most is Equifax. It's not because of the scale or anything like that, it's not even because they were social security identifiers, it's actually because executives, three days after a completely preventable data breach, which was just the result of gross negligence, sold 1.8 million bucks worth of stock. Like, good for them, right? And then 37 days later, that's when they notified the regulators.

It's kinda like, what are you thinking? Like, seriously.

That is not the type of leadership behaviour that we expect and it's certainly not the type of leadership behaviour that inspires trust.

So data breaches are on the rise, they're proliferating, in terms of impact, in terms of quantity and frequency, again, not gonna touch on it too much but we have to be aware of this stuff because there are consequences to businesses, the average data breach costs about $3.6 million, the average and it negatively impacts people's lives. People died or were killed or committed suicide after the Ashley Madison hack.

That is serious shit so we need to be thinking about data breaches very, very seriously.

Internet of everything or connected devices, I think IDG's estimate is something like 20 billion connected devices by 2020.

Like, that's a shitload of surveillance capability, right? Not inherently against it.

Absolutely not inherently against it, but if you go speak to an IoT security specialist or you go speak to a customer identity and access management company that focuses on connected devices, like ForgeRock, they'll probably tell you that a heap of the security and the privacy stuff that we need to figure out is yet to be figured out.

So, we're innovating, we're doing good stuff but then it's like, oh, shit, what's gonna happen if something bad goes wrong here? We just need to be conscious of it.

Standards, the IEEE's work, it's really good. Like, you should check it out.

It's worth the investment.

Big-ass report, but maybe get the short one to start off with and then if you have an appetite for it, dive deeper. Other interesting stuff is happening.

Come on, this might keep happening so I'll walk over every now and then.

Kantara has released something for consent, a consent receipt specification and standard. Consent is something that in the EU many organisations are trying to rely on as their legal justification to process data. It's probably gonna end up being the same here in Australia.

Consent will probably be the processing justification for things like open banking, consumer data rights, so that type of stuff is worth familiarising yourself with.

Another one that's just super cool, like if you do data work, is the Classification of Everyday Living, the COEL standard, a behavioural taxonomy, really, really impressive, robust work.

So standards are emerging in a variety of different contexts.

Digital ID is another one that we should probably pay attention to.

The reason that I think it's important is 'cause we don't always have to solve these problems. We can tap into stuff that exists, really good work. And standards, more broadly, should enable us to achieve interoperability faster, which is absolutely critical if we're to realise the potential of sharing data. Consumer behaviour, man that's like, that's pretty broad.

So I'm gonna go narrow, 'cause I've only got a few minutes.

The thing that I'd like to touch on is twofold, I suppose.

So when I go into organisations and I've had the good fortune of working with many of the world's leading organisations, focusing specifically on this space, and sometimes I speak to executives, and they basically say, "Man, no one cares, so why should we?".

And interestingly, when I carefully guide them through the data that we have on this, they learn quite quickly that people do care. Like Hillary was saying, people feel like they've lost control.

It's almost like there was a war, they lost it, miserably.

And now they've given up.

It's a bit sad really, but it's also been designed.

Like we, we make it so hard for people to understand how we're using their data. Like, do you expect someone to read 26 page terms and conditions? Give you an example, right.

Seven lawyers tried to interpret Apple's, and Apple's often cited as like the privacy king.

26 pages, seven lawyers, seven days.

Had no idea what Apple was doing with the data after it.

What chance do we have? The answer's none.

Business models, again, surveillance capitalism, attention economy, that's kind of the de facto, unless you have a SaaS play or something like that, which is cool, is more of a, you know, a value exchange. What's interesting though, is there are emerging business models in the personal information management services sector, like PIMS.

When someone first said PIMS, I was like, "I'm so thirsty".

And then I was very very deeply disappointed. Their business models are much more participatory, as in, they will make use of your data in some type of way, right? They'll orchestrate the flow of your data, and then if it creates a valuable outcome for you they participate in the value.

Isn't that a novel idea? Very cool.

Data regulations, GDPR, e-privacy regulation, hands up, who knows about those regulations? Who's heard about these regulations? A few of us, that's actually pretty good.

I keynoted a big event in Germany last year, and the Germans are terribly privacy conscious. Like, really really progressive in terms of how they think about privacy.

It's a massive room and like two people put their hands up.

So that's really impressive, well done.

Basically what the European Commission are trying to do, is digitise the EU charter of human rights.

We've never had that before.

We really haven't had rights online.

So that's super exciting.

Hands up if you've heard of Consumer Data Rights? Here in Australia? Oh that's surprising, less.

Well that's basically coming into effect now, and it's probably going to impact all of us. I'd suggest checking it out.

There's some good guidance, it's not super long, it's not like the GDPR, you don't have to read through like, you know, 400 pages worth of stuff.

Check that out.

And then the other thing is Mandatory Breach Notification.

Hands up if you've heard of that? A few more people.

Okay, interesting.

So all of those things are happening, right? And again, like this isn't a five minute snapshot state of the world.

It's a five minute snapshot state of the world in the context of data trust.

So, be explicit about that.

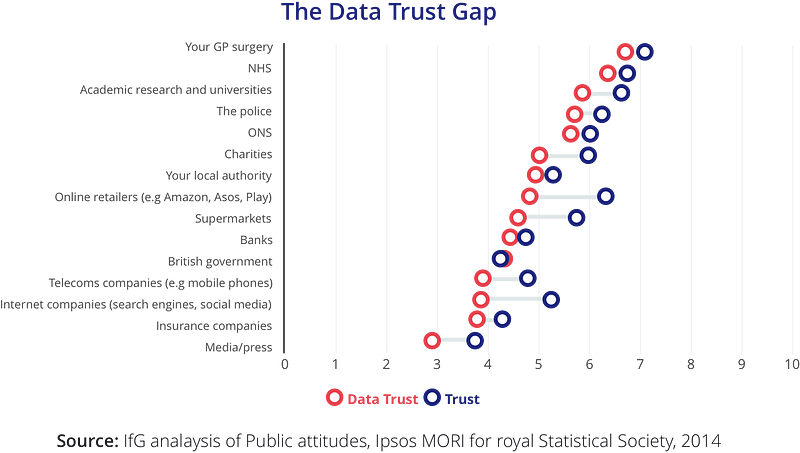

But what has it led to? Well, in 2017, who follows the Edelman Trust Barometer? Anyone? Interesting resource, really good resource. In 2017, trust was at a measurable all time low, and it was across, like we weren't discriminating, right. Like trust was at a measurable all time low, across industries, across borders, all that type of stuff. This year the data's pretty similar, pretty stagnant. Now, what's interesting, given the context of what we do, at Greater Than X, is the Data Trust, so the trust a person places in an organisation's data practices is even lower.

So let's just say I trust my bank, right? To manage my money.

I don't really have a great relationship with my bank. I'd move at the drop of a hat if there was something better.

But I trust them kind of, right? I trust that they will do the right thing by my money, I kinda trust in that they're delivering me a kind of appropriate value proposition.

But if they came to me, if Commbank came to me and said, "Nathan, can you give us some of your health data for this thing?".

I would, "Excuse me? What the fuck do you want that for?".

You know, it just doesn't align to the context. And so, when we measure data trust, at the moment people have a very low propensity to willingly share.

Remember, today's status quo is that we're forced to share, we don't have a choice.

Zero sum game.

Tick the box or you get nothing, right? You bugger off somewhere else.

We're talking about willingly sharing.

So, there's not really a definition for data trust. Actually in our playbook you'll notice we came up with a definition for data trust, one that I'm really happy with, 'cause it was like, there was nothing, I was like googling and all this type of stuff, like it, worst definitions, not meaningful at all.

But basically that's the thing there, that's the key thing, will they willingly share their data? Again, we've had the good fortune of working with a bunch of big brands on this. I'm very much struggling with this clicker. So if you see me looking stressed, I'm pretty comfortable up here speaking to you, but that, like that's getting to me.

And, we've noticed a lot of consistent themes. And, one of them that was particularly pressing, was that some organisations, they kinda had an inability to get started.

It was like they were all intimidated by the challenge.

They needed to go from zero to one, you know, the trust gap that they face today to, not even a return on trust, not trust as a competitive advantage, but just get moving in the right direction. So we partnered up with the Data Transparency Lab in Barcelona and we put together these three plays, put them into practice, and eventually converted it into a playbook. That was one of the big things of my presentation, John, and you gave it away, so, it's gonna be a bit underwhelming at the end maybe.

Let me talk about these three plays really quickly, and they are contained in the playbook, so it's not like you need to have me or one of our team members in the room to execute them. Play number one is about getting to know the market. Now, I spoke about this at a really high level a couple of minutes ago.

Getting to know the market doesn't mean listening to some random guy on stage for five minutes, it means establishing a clear point of view. It means getting to know the behavioural, regulatory, and technological drivers that are impacting your ecosystem position. Again, establishing a point of view enables you to have a potentially meaningful role within the ecosystem that you operate, and interoperate, within. Play number two is about getting to know your customer. Dave is probably well aware of this, it's like, it's so rare that organisations understand the situational context in which their value proposition becomes relevant. There is a systemic misunderstanding of the job to be done.

Now getting to know your customer isn't just about jobs to be done, but it's certainly part of it.

It's about getting close to the person that you intend to serve.

You cannot become inherently trustworthy, if you don't know who you serve and you don't deliver a valuable, meaningful, and engaging proposition.

So once we've done that, we work with organisations, and the playbook helps with this, to evolve design practice.

Now I don't mean some crazy, elaborate, $100 million idea and design programme, I mean some really simple stuff.

We take two specific approaches.

Remember, we're going from zero to one, not from one to 100 yet.

Zero to one.

First is, we've kind of dubbed, it's not really official but, like data trust experience mapping.

Just taking like, existing service and experience design tools that basically every single one of us are super familiar with, and it's just adding a different layer.

So it's really easy to adopt, it's really easy to make use of, and it gives us some pretty good qualitative insight.

When we start with qual we tend to back it up with quant.

So approach number two is data trust design experiments.

We use an experiment card, something we created at Greater Than X, basically it just makes experiments systematic and repeatable, and that'll enable us to put to the test some of the hypotheses that we framed.

We wanna support or refute what we think we know about how people might choose to share or not share, when we ask them to do so.

Again, put all these plays into practice, pulled them together in this playbook.

We actually started off selling it and it did really well, it was kinda like a timely thing in the EU. Again, the market's very different here.

Like, if you don't comply with the GDPR like it can get serious, like up to 4% of annual global revenues are the fines like, this probably isn't a toothless tiger regulation, this is the most significant data regulation we've ever seen globally.

So it did really well, John and Rose have been kind enough to, you know, offer this to you guys for free.

I'd say, worst case scenario, you know, take it, have a skim through, if something is of interest, dive a little bit deeper.

It's not like an ebook, you know, where you just sit on the train reading it. It really should be something that like you keep in your pocket, and it becomes useful when you have a job to be done at work.

That's the intention anyway, let me know if we live up to that promise, my word. Okay so where am I going with all of this? I think there will be more data sharing in the future. I think more data sharing is actually good. The problem today is that we're amidst this state of systemic mistrust. There is a power imbalance.

And you know, Facebook is part of contributing to that, but they are not alone, right? If you look at the World Economic Forum's work from 2011, they define personal data as a new economic asset class, brilliant, that's great. The key thing that they talk about when referencing how do we go from where we are today, to realising the value potential, and it's massive. Forecasts are huge, of data sharing.

And it's that we must design, consciously design, an inherently trustworthy ecosystem where individuals, us, organisations, and connected things, can share data, and participate in the value that sharing data creates.

Now we're a long way off of that.

So in the future, more data sharing, but we need to establish this trustworthy ecosystem. What are we doing to contribute to that? Well, we were basically working on an internal data trust design system, and then it got to the point we were like, we should probably just try and open source this. And so that's what we're doing.

Principles, patterns, practices, to help organisations design transparent, value-generating, consequence-accepting products and services.

We actually released the first, oh.

We released the first pattern recently.

Those are our six guiding principles.

The thing at NAB a few weeks ago, right, one of the chaps, can't remember his name, but he was very entertaining, he came up with like this synthesised list of all of the design principles, and there was like 400 of them. I really don't mean to throw another six at you. These are used in a very specific context.

And I don't know how they compare to the other 400.

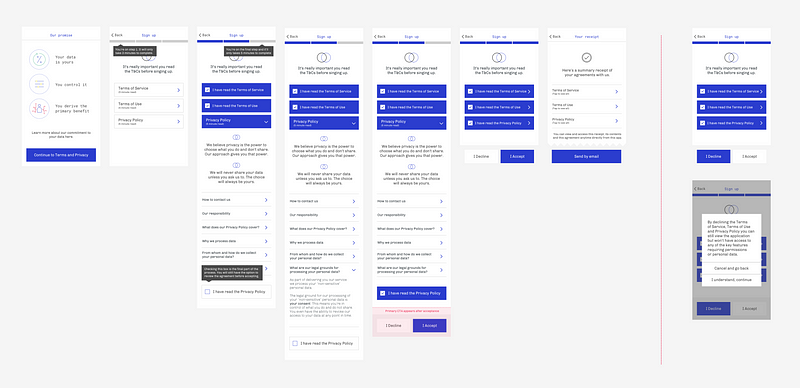

But for us they've been working really well. We released our first design pattern maybe about a week and a half ago? Two weeks ago? For upfront terms and conditions, why did we do that? Upfront terms and conditions are ubiquitous, they're absolutely broken, we know that.

If you head to our website, greaterthanexperience.design, top nav, Insights, that will take you straight to our Medium blog.

It's probably the most recent blog on there from memory. Check that out, we provide a bunch of guidance, and we're gonna release a number of other patterns. Upfront consent, just in time consent, consent revocation, zero knowledge proof, so stuff that's relevant to distributed ledger tech as well.

Why I'm here today though is, it's not even really about the data trust design system.

Like I would love for us to collectively come together and contribute to that, I think together we are stronger but, it's kinda because I'm aligned to Hillary and the belief that we are in a unique position of power.

We can actually influence the products, services, and experiences that make their way into the hearts, minds, and wallets of people all around the world.

And as Spiderman's uncle down here said, "With great power comes great responsibility". Now, Greater Than X, tiny little firm, we're only nine months old.

We can't do it alone, right? But together, potentially we can.

And that's what I put to you today.

The time to design for trust is absolutely now. But we cannot do it alone.

Now, talking about ethics, talking about trust, when I think about it, designing for trust is about designing for people.

It's about giving people power.

It's about respecting their agency, respecting their rights, and it's about giving them control.

It's the right thing to do, but it's also really good for business.

So if you're not totally motivated by just doing the right thing, and you need an economic incentive, this might help. Trustworthy brands are inherently more meaningful. Meaningful brands outperform the stock market by 206% on average.

In fact, 50% of people will pay a premium to the brands that they trust the most. Designing for trust is not just good for people it's good for business.

And I believe that together, we can start designing for trust today.

Thank you very much.

(electronic music)