Speaking with Machines: Conversation as an Interaction Model

(synthesised music) - Yes, my name is Joe Toscano.

I was, up until 2017, June 2017, a consultant for Google doing experience design. Working for a consultancy called RGA and consulting with a bunch of our different product teams around the country, around the globe.

So I have won awards in conversational design. I left for the purpose of teaching people about ethics and I've started a foundation, a nonprofit called Design Good, where I am helping put trust back into the internet. Three part mission, which is, one, to help the general public understand what's going on. Two, to provide technologists with the knowledge, the resources, and a language to sell more ethical ideas to their bosses and into the product road map. And three, to help policy makers understand what's going on so that we can maintain innovation at a good pace and also have fun while we're doing it.

And I've written a book called Automating Humanity in order to start this.

But what I'm here today to talk about is conversation design, which is where I've won my awards. One is for BotBot.

If you haven't heard of BotBot, have any of you heard of it? Maybe a couple.

This was for Reply.ai and we created a bot to help people build their bots.

So instead of doing it through a GUI interface of click-and-drag or coding it, hard coding it yourself, this was something where you could come in and have a conversation with it, and it would build your bot.

And next year, I will be releasing my second book, called We Need To Talk.

It covers the ethical foundations of conversation design and how you bring it to life.

And this is what we're gonna go over today. So we'll start off with what's happening in the industry. I'm sure many of you have heard a lot of trends on Chatbots, and you've seen or probably interacted with many. Many of which you've probably been like, "This is not that great. How do I make this better?"

But I'll show you some cool ones that are out there that maybe can give you some inspo.

And this is what I'm also gonna be talking about today. So this one, for example, this is Bishop, by Autodesk. Bishop allows people working with Autodesk to speak to the machine and help them engineer things. In the near future, we'll be able to give these machines constraints and they will extend their minds beyond our natural capabilities.

Adobe Sensei, has anyone heard of this? So we've all been using Cloud for a while, right? Adobe has been using that data to help train algorithms that can help you design by speaking to machines.

And that's what Adobe Sensei will be.

What this can do long-term is these machines can extend our minds, like I said, and help us think of different things we may have never thought before, do multiple iterations in very short periods of time, and many other things that we couldn't predict until maybe the product comes out.

There's also the fact that it's helping us make inclusive and accessible technologies. There are some people that don't have all the modalities that maybe some of us in the room do.

We can extend that into a machine and have the work done for them.

This is Soul Machines.

How many of you have heard of Soul Machines? Small subset.

They are from New Zealand.

I believe they recently started working with IBM in the medical space. But they started out by remapping the human facial muscles to create a render that looks and moves just like us. What they've done is they've used that to help the New Zealand disability insurance scheme work through their system.

This makes it so that people don't have to get on a phone call and work with a phone operator.

They can just work with a screen and work with an avatar, which can then monitor them, so if maybe they fell asleep, it can pause the experience.

If it sees that the person gets agitated, it can change the reactions and it can bring it to life in a different way. If they can't hear, it's printed on a screen and allows them to interact.

Lastly, it lets us get away from screens.

We've been working on this engagement model, getting people hooked to a screen, for a long time, and conversation has the ability to get us away from screens in many ways, but still enable meaningful interactions.

Now that we see this, why is conversation going to be that tool? Because yeah, those are ideal scenarios, but why is conversation the next step in human-computer interaction models? It's something that gets missed in the process of Chatbot conversation.

If you think of a short history of human-computer interaction models, back when computers first came out, we were working in binary.

This is not very human friendly, but this is the only way that we had to communicate and interact with machines.

There's a reason though, why the public didn't pick this up. Because we don't speak like this, we don't think like this, it was hard, it was obnoxious, no one wanted to touch it. The late 60s and 70s, we get into command line code, which is a little bit closer to English and the way that we speak, but still pretty far off.

Far enough that the public didn't wanna pick it up. It wasn't until the late 70s, early 80s when we saw graphical computing come around, that allowed people to interact with machines in a way that they enjoyed.

And that was easy and usable.

Even a toddler can point at something and scream and tell you what they want.

With GUIs, all we had to do was point and click. So it's much, much easier.

More recently with the mobile computing era, we've gotten into touch and gestural interactions which people have claimed maybe is the most intuitive interface, but I would argue, not so much.

If you look at many people across the internet, they struggle with these interfaces because there's no signifiers, there's no affordances on how to interact with them.

We're just expected to know.

Now for younger generations, or people who are in the field, this may be easy and definitely the truth, but for a lot of people it's not.

The reason why we'll have conversation and voice as the future of interaction models is because it's the first time in computing history that we are not learning the machine's language, we're not learning the machine's system.

The machine is learning our language, and in a sense, our system.

And if you look at search results from Google, you're seeing that more people today are using voice than ever before.

More than 75% are in natural language queries. Even when they're searching, they're searching for like, "What's the best burger place near me?" Not like, "Burger place, Sydney, Australia." Natural language queries.

And in the recent years, they've seen voice production go up by nearly double in many ways.

Next, understanding language.

Now that we see that this is the future of interaction models, once we figure it out, we need to understand the fundamentals of it before we can try to reverse engineer it.

How do we understand language? This is gonna be a psychological breakdown of how we actually think about words, sentences, letters, everything. And we're gonna start with a letter.

So letter is the foundation of a lot of this stuff. When we're looking at language, it's the letter. And if you think about a letter, a letter is just a visual symbol.

That L is just two lines connected and this type happens to be a little fancier than normal. Just a little different.

But at the end of the day, it's just a visual symbol. If you don't know what that letter means, or how it sounds, it's absolutely meaningless. But we recognise letters in pattern recognition, just the way that machines do.

Through recursive strategy.

So if I give you this form here on the far left, you can look at that and begin to guess what letter out of the 26 letters in the alphabet that might be. If I show you more, you get a better opportunity to understand it. And if I show you more, now you know.

Taking it from 26 to about five or six, to now you know.

Just a few steps.

But going back to how this is a visual symbol, once you learn that form, you can see it in any other form, and it's still the same letter despite being a completely different visual symbol. That's pattern recognition, and that's just part of us. Once you go past letters, we get into words. Words are the compounding of letters put together, and trying to understand that is much more complex. Because things don't sound exactly like they look. Cat does not sound like C-A-T, but that's how we understand it.

However, this is something we really love, putting words together, guessing them.

For example, I can give you all these and you can read 'em.

You might not get it immediately, but your mind can process it.

If I give you this, it's a little easier.

It's more immediate.

(audience laughs) Same goes for this.

Now take a second, I bet you you can read that. Might take you a second to start processing it, but your mind can recognise the patterns in this and begin to guess what it's saying.

If I gave you that, it makes it really, really easy though. We love this so much that we make games out of it. You have crosswords, you have word searches, in the States, we have Wheel of Fortune, where people guess letters and words.

We love this, it's part of being human.

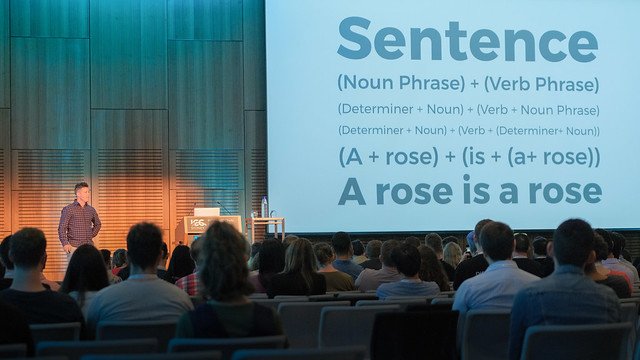

But when you get to the form of sentence, it's so abstract and so complex.

This is why we're struggling with language processing and making conversational interfaces.

In the English language, this is the base of a sentence. Right here, the fourth one down.

That's the base equation of a sentence; to make a legitimate sentence, it needs those five pieces. Now, do we have any engineers in the room? I don't know if anyone switched over from the Dev side. Yeah, cool, a few.

I know that looks like an easy equation, it's really, really not.

That's the problem that we're struggling with, is language isn't logical.

It's very illogical.

We speak in broken phrases and broken sentences, we have slang, we have nuances that you can't really programme into a computer very easily. Also consider the complexity of just this one sentence. Consider that in the English language, we have 20 determiners, more than 170,000 nouns, and more than 4,000 verbs.

You get 46.2 quadrillion different ways to make a five word sentence.
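As a rough check of that figure, here is a minimal sketch of the arithmetic. It assumes the five pieces are determiner, noun, verb, determiner, noun (an assumption, since the slide is not reproduced here) and uses the word counts quoted in the talk.

```python
from math import prod

# Approximate word counts quoted in the talk.
DETERMINERS = 20
NOUNS = 170_000
VERBS = 4_000

# Assumed five-slot pattern: determiner, noun, verb, determiner, noun.
PATTERN = [DETERMINERS, NOUNS, VERBS, DETERMINERS, NOUNS]


def count_sentences(slot_sizes):
    """Multiply the number of word choices available in each slot."""
    return prod(slot_sizes)


total = count_sentences(PATTERN)
print(f"{total:,}")                        # 46,240,000,000,000,000
print(f"{total / 1e15:.1f} quadrillion")   # 46.2 quadrillion
```

Under that assumed pattern the product matches the quoted 46.2 quadrillion, and every extra slot multiplies the total again.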

And the average sentence in the English language is 20 words long.

So I'll let you do the math on that one.

(audience laughs) Now you can begin to understand why it's so impressive, what people are doing at Google, and Amazon, and Apple with Siri, and Alexa, and Assistant, and all these services. It's not perfect, but they're doing pretty damn well. This is also why it's so challenging for smaller companies who lack the data to build these services, from scratch at least.

From there though, once you've built it, you have to consider the personality of the conversation that you're trying to define. Now people, they say, "We don't want to build a personality, it should be a robot." Even Google Assistant, for example, Google Assistant doesn't have a personality, but in not having a personality, it absolutely does. And Google did that intentionally.

If you talk to Google people, if you really think about it, Assistant was made to be the future of search. And it is intentionally designed to not have a personality. But we as humans will attribute personality to everything that we interact with.

How many times have you talked to your dog recently? Or your car, or your phone, or anything.

We make names for things, we just do this, for no reason that we really understand, but we do. So people are gonna do it to your conversational interface and your bot. And it's something that you should take advantage of and leverage with your brand.

Step one is defining voice.

This is how we define personality.

And this comes down to the very foundational elements here. With audio only experiences, you have the affordances of pitch, range, volume, and speed. So for example, there's a difference from me saying, "Oh, dear." Compared to me saying, "Oh, dear!" Now that also has some body language, but it also goes with the pitch, range, speed, and volume of the way I speak.

And that defines personality and interactions in ways that we don't ever really think about consciously, but we've learned over our lifetimes.

Then when you have text, there's even more layers to it and there's even more affordances for us as conversational designers.

This comes into word choice, content choice, punctuation, grammar.

These are just basic English class 101.

But we tend to forget them because we get so embedded in the technological capabilities of these things we forget like, actually, there's just some really sound fundamentals that we haven't thought about for a while, since maybe grade school.

But in these, we can define people's personalities very easily. We can tell where they're from, their origin. Not necessarily where they live now, but their origin.

For example, in the United States, there's a difference between someone who says Coke, pop, and soda.

Coke is more in the South, pop is maybe like the Midwest, soda is typically on the coasts.

There's a difference in emotional states that we can very easily tell.

The way we speak, the way we text.

And same with gender, it's very easy to tell gender differences.

For example, women tend to be much more polite than men. Kind of a duh, right? We all kinda know that.

Don't get confused.

People will define your experience for you in their mind, whether you want them to or not.

So like I said, it's an opportunity to leverage this for your brand and extend it in a meaningful way. Let's think more about character development. Once we know the foundations, we can put it together and actually build a character. And what I tell people here, is listen to popular culture. Look to popular culture.

Especially the radio.

Radio hosts do a great job, or podcast hosts, of defining a personality on-air, with no visual affordances and nothing really prepping them besides their voice.

So it's a great way to understand how to define a personality.

But beyond that, you can even look to TV, and how they define characters.

So an example I give to show how important it is that we express diversity in these, is if you look at the United States in the 90s, popular culture had shows like Friends, Seinfeld, Full House, and it painted this world of white people, male patriarchy, and that was popular culture, which ended up defining culture.

Nowadays, we have shows like Modern Family, Black-ish, Jane the Virgin. As we create bots, conversational agents that then express personalities in different ways of life, we will begin to teach people how to interact with those personalities and those different ways of life, those cultures, those thought processes, et cetera, et cetera.

And if we don't include them, we may also risk losing them.

When you talk to say, like a kid who's growing up on these machines, we have to understand how we're beginning to train them to speak, how we're beginning to think about things and training them to think about interactions with other people, and how that may play out.

We're growing up, we're having kids, and it's been proven that kids are growing up to be much more demanding in many ways than we were before.

Next, is personas.

So personas, this is very, very important in conversation design, just as it is with regular design.

But if you wanna think about persona, we can look at maybe like, Batman.

Creating a persona comes down to defining a back story. Giving them various sets of pitch, range, volume, and speed. Maybe making some quotes about how Batman interacts in different situations. And you could imagine extending this out even more so. Maybe when Batman's scared, Batman acts like this, or speaks like this.

Maybe when Batman's happy, Batman acts like this, or acts like that.
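One way to write such a persona down so a team can share it is as a small data structure. The sketch below is only an illustration: the class names, field names, moods, and example values are all hypothetical, and the `say` method assumes the target speech engine accepts SSML `prosody` attributes (`pitch`, `range`, `volume`, `rate`), which not every engine supports in full.

```python
from dataclasses import dataclass, field
from xml.sax.saxutils import escape


@dataclass
class Voice:
    """The four audio affordances named in the talk (hypothetical labels)."""
    pitch: str = "medium"
    pitch_range: str = "medium"
    volume: str = "medium"
    speed: str = "medium"


@dataclass
class Persona:
    name: str
    back_story: str
    default_voice: Voice = field(default_factory=Voice)
    voices_by_mood: dict = field(default_factory=dict)   # mood -> Voice
    quotes_by_mood: dict = field(default_factory=dict)   # mood -> example lines

    def voice_for(self, mood):
        """Fall back to the default voice when a mood has no override."""
        return self.voices_by_mood.get(mood, self.default_voice)

    def say(self, text, mood="neutral"):
        """Wrap text in an SSML prosody element for the given mood."""
        v = self.voice_for(mood)
        return (
            f'<prosody pitch="{v.pitch}" range="{v.pitch_range}" '
            f'volume="{v.volume}" rate="{v.speed}">{escape(text)}</prosody>'
        )


# Illustrative values only; a real persona document would be written by the team.
batman = Persona(
    name="Batman",
    back_story="Placeholder back story agreed on by the team.",
    default_voice=Voice(pitch="low", pitch_range="low", volume="soft", speed="slow"),
    voices_by_mood={"scared": Voice(pitch="low", pitch_range="medium", volume="loud", speed="fast")},
    quotes_by_mood={"scared": ["Placeholder line for a tense moment."]},
)

print(batman.say("Placeholder line for a tense moment.", mood="scared"))
```

Keeping the default voice and the per-mood overrides in one object gives everyone the same reference point when writing new lines.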

Now what this does, is just like personas in your business now, it gives something that your team can latch on to and always have as a centre point.

It allows you to bring on new team members and bring them up to speed faster, it allows you to transfer on to people that maybe you need to outsource to, and do for production.

And have the consistency within your brand and your product. Next, talking about designing conversations, so steps to get there.

There's three main keys to designing conversation. One is to initiate a conversation.

The second point is to listen.

And the third is to respond.

One of these is much, much easier than the others. Initiating is like, a duh.

We all know right now you say, "Hey Google, hey Siri", whatever that may be, but long-term, this is gonna go away more than likely. If you look at recently the continued conversation with Alexa or with Google, all these services, they're getting to a point where they're just gonna be listening.

You will speak to that agent in the context of your life and it will begin to understand, or at least that's the goal.

There you go.

But we also have to think about how this affects business opportunity.

For example, speaking to a machine, we don't recognise character base anymore.

So startups could create all these funky, creative names, and I can look them up on the internet, but when you talk about it in audio, there's only one noise for this sound.

Netflix sounds like "net flicks".

Unless you want people crying to the machine, there's no difference between Netflix and "net flicks". (audience laughs) We have to think about that when we're making hot words and different keys. Active listening.

This is the struggle.

This is one of the biggest struggles of creating these right now.

It often gets overlooked.

It's a struggle because we struggle with it in real life. We all struggle with listening, and that's okay. But here's how you can make a bot do this, or make it easier.

For a machine, we need a lot of different inputs in order to make something happen.

And let's talk about travel here, we'll just give an example, and on the left here are all the variables you may need to greet someone and create a travel experience. But that means I would have to go up to Assistant and say, "Hey, I need a roundtrip plane ticket from San Francisco, California to Honolulu, Hawaii for one adult, from August 26th, 2018 to September 7th, 2018." And not a single one of us has ever spoken like that in our lives.

(audience laughs) Right? Right.

It's our job, as the conversational designers to help make that easier and to extract what we need out of the person, to just have a good conversation.

For example, realistic input, they're gonna say, "I wanna book a flight to Hawaii." Or if you're talking to your friend, you might say, "Hey, I'm thinking about going to Hawaii." Not even, "I want to book it." So how do you extend that conversation? Well, for example, you hear, "I wanna book a flight to Hawaii", you could say, "I can get you a flight to Hawaii. Which island were you thinking about?"

What you're doing is you're confirming, "I heard you say, you wanna go to Hawaii." You're giving them a receipt for what they just said. But then you're asking them what airport, because actually, there's seven different airports you could fly into in Hawaii.
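The confirm-then-ask pattern can be sketched as a simple slot-filling loop. This is a minimal illustration, not how any particular assistant works: the slot names, their order, and the prompt wording are assumptions, and it presumes some upstream language-understanding step has already pulled whatever details it could out of the user's message.

```python
# Hypothetical slots a flight search needs, in the order the bot asks for them.
REQUIRED_SLOTS = [
    "destination",
    "arrival_airport",
    "origin",
    "depart_date",
    "return_date",
    "passengers",
]

# Hypothetical follow-up question for each missing slot.
PROMPTS = {
    "destination": "Where would you like to go?",
    "arrival_airport": "Which airport would you like to fly into?",
    "origin": "Where will you be flying from?",
    "depart_date": "When do you want to leave?",
    "return_date": "When do you want to come back?",
    "passengers": "How many people are travelling?",
}


def next_turn(slots):
    """Build the bot's next message: a receipt for what was heard, then one question."""
    known = {name: value for name, value in slots.items() if value}
    missing = [name for name in REQUIRED_SLOTS if name not in known]

    # The "receipt": confirm the destination back to the person.
    receipt = ""
    if "destination" in known:
        receipt = f"I can get you a flight to {known['destination']}. "

    if not missing:
        return receipt + "I have everything I need. Shall I search for flights?"
    # Ask for only one missing detail per turn to keep the exchange conversational.
    return receipt + PROMPTS[missing[0]]


print(next_turn({"destination": "Hawaii"}))
# I can get you a flight to Hawaii. Which airport would you like to fly into?
```

Asking for one detail at a time is a design choice here; it trades a few extra turns for messages that stay short and easy to answer.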

Then when it comes to listening, you also have to consider that right now, we have a lot of experiences that are focused on grabbing one piece of information, and running with it.

Because we're used to on the web, trying to create the fastest load time on a page. So we wanna get them back and forth as quick as possible. That's not the same with conversation.

People are okay with waiting, they would much rather have the correct answer than the quickest answer.

So people speak in broken sentences, what you could do is you create an event listener to the keystrokes to see if they are, in fact still thinking.

Give them a second or two to maybe continue thinking if they need to.

And once you find that they're done, you grab the whole piece of it, and you analyse that. What you do in the meantime, is you throw up these dots, these listening dots. We've all seen those before when we're texting someone or messaging with someone, and what do you do when someone throws, when that happens? You're looking at your screen, and you're texting someone back and forth, you get those dots, you're like, "Just text me back, like, come on, what are you doin'?" That's what people are gonna do here too.
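The wait-until-they-finish idea can be sketched as a small buffer that tracks the last keystroke or message and only hands over the full text after a quiet period. Everything here is an assumption for illustration: the class and method names are hypothetical, the two-second pause is just the "second or two" from the talk, and a real chat client would wire `on_keystroke` to its own typing events.

```python
import time


class TypingBuffer:
    """Collects message fragments and reports when the user has paused long enough."""

    def __init__(self, quiet_seconds=2.0, clock=time.monotonic):
        self.quiet_seconds = quiet_seconds
        self.clock = clock            # injectable so the logic can be tested
        self.fragments = []
        self.last_activity = None

    def on_keystroke(self):
        """Call whenever the user types; it resets the quiet-period timer."""
        self.last_activity = self.clock()

    def on_message(self, text):
        """Call when the user sends a fragment of their thought."""
        self.fragments.append(text)
        self.last_activity = self.clock()

    def is_done(self):
        """True once something was sent and nothing has happened for quiet_seconds."""
        if not self.fragments or self.last_activity is None:
            return False
        return self.clock() - self.last_activity >= self.quiet_seconds

    def show_listening_dots(self):
        """Show the dots while fragments are held but the user may still be typing."""
        return bool(self.fragments) and not self.is_done()

    def flush(self):
        """Return the whole utterance for analysis and reset the buffer."""
        whole = " ".join(self.fragments)
        self.fragments = []
        self.last_activity = None
        return whole
```

A caller would poll `is_done()` on a timer, show the dots while `show_listening_dots()` is true, and pass the result of `flush()` to whatever analyses the message.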

They're okay with waiting, if they have the right response. And if it's in a meaningful amount of time. In terms of responding, there's also some things here to think about. So when it comes to responding, you wanna think about quantity, relevance, and clarity. Quantity comes down to, we don't want a long wall of text coming at us. We don't want a five minute monologue.

None of us do.

Probably don't even wanna listen to me for the rest of this talk, right? We have to be conscious of that when we're creating an experience, and be attentive to their attention span and their desires. The ideal is typically less than 250 characters; right now that's pretty standard in many ways.
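A simple guard for the quantity point is to split any long reply into several short messages. The sketch below is one possible approach, not a standard: the function name is hypothetical, the 250-character ceiling is the rule of thumb from the talk, and breaking at sentence ends is an assumption about what reads naturally.

```python
import re

MAX_CHARS = 250  # rule-of-thumb ceiling mentioned in the talk


def split_response(text, max_chars=MAX_CHARS):
    """Split text into messages of at most max_chars, breaking at sentence ends where possible."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    messages, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate
            continue
        if current:
            messages.append(current)
        # A single sentence longer than the limit is wrapped on word boundaries.
        while len(sentence) > max_chars:
            cut = sentence.rfind(" ", 0, max_chars + 1)
            if cut <= 0:
                cut = max_chars  # no space found, so cut mid-word
            messages.append(sentence[:cut].strip())
            sentence = sentence[cut:].strip()
        current = sentence
    if current:
        messages.append(current)
    return messages


reply = " ".join(f"This is sentence number {i} in a long reply." for i in range(20))
for message in split_response(reply):
    print(len(message), message[:40] + "...")
```

Sending the pieces as separate messages, with a short pause between them, keeps each one digestible without dropping any content.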

Relevance, keeping on topic.

We think in conversational threads.

So we might be talking about a rugby game here or something like that, you're gonna continue down the whole experience of that rugby idea until you pivot in your conversation into another conversational thread, another idea. So keep it on topic while you're speaking to someone. If you're just talking about rugby and then all of a sudden pop out about your newborn, people are gonna be like, "What'd that come out of? Where'd that come from?" Next and last is clarity.

Clarity comes down to putting it in a way that can be easily digestible.

We don't really think about it, but the way that we speak is very efficient. The way things roll off our tongue, we have that expression, "It just rolled off my tongue." There's absolutely a truth to it.

So sometimes when we write, it doesn't sound or interact as well as when we actually speak it out loud. Something I talk to people about is go read your script. Say it out loud, and then if you stumble, then hey, someone else is probably gonna stumble too. But what does this look like? In terms of quantity, like I said, we don't want a wall of text. We don't want something that's so long that we can't keep up with it.

In terms of relevance, keep it on topic.

You don't just wanna spurt out random information and sound like you're schizophrenic.

And in terms of clarity here, if you spoke like this, it's gonna be kind of troubling if someone is trying to listen.

(audience laughs) Just be conscious of that.

You can have very simple responses, quick back and forth digestible content, allows people to seamlessly move through an experience and feel like they're talking to a friend.

And it doesn't take that much work.

Last is systems anatomy.

This is where I talk about moving beyond just conversation as a Chatbot. Because I think there's this trend right now to assume that conversation as an interaction model must mean that we're gonna develop something in Messenger. This is what most Chatbots look like.

But I personally don't believe this is the future. I think this is the Walkman to what will be our iPod era of conversational interactions. Now if you think about what's happening on the back end, really, any intelligent experience is just an input given to an intelligent system, which then gets processed and gives us an output. Basics 101.

Now, it gets much more complex than that, when you actually develop it, but if you're thinking about it from a design perspective in a touch point of an ecosystem, this is what it is.

The challenge is that we have so many touch points nowadays, where does this meaningfully extend? Where does it end? Now you're seeing a bunch of companies test a bunch of different things in different ways that they're not fully sure, but they have a bunch of money to test it.

For example, we have an Echo Show, which now has a screen.

You have Google going into LG products, and Sony's TVs, and headphones, and recently, Amazon's even getting into your microwave. They're just throwing things in, because they can. But where this is gonna be meaningful is the reflection of us.

That's what these machines always have been and always will be.

They can only do what we do, and they will only be as enjoyable as we enjoy them. Now what that means is, sometimes there's gonna be an opportunity to speak in conversation that's better than others. If I'm on a crowded, busy train with a bunch of people around me, I don't wanna be speaking to a machine.

Maybe there's a private time when I can, but other times, that would be better for text. Sometimes it's better to have a screen.

That's why they're getting into this.

Sometimes it's better or easier, for example, if I'm listening to music, to go press the Next button, than it is for me to say, "Hey Alexa, go to the next song." We have to think in how we need to input, and how we want the output to come.

And sometimes we need to think about the sensory experiences that we are interacting with and how we can make that more enjoyable for people as a combination.

What we're going to head into is an era of ambient computing where interactions are just into everything, all the touch points, and AI is running in the background. And it's up to us as the designers and the engineers of these systems to really think it through and make something that is enjoyable, if we want consumers to adopt it. This has been a high level of what I will be covering in my book next year when it launches.

But I always end the talk with, we're here for a reason.

You're all ambitious people who are trying to do something better either with your career, or with your product team, whatever that may be.

I challenge you to create the future that you wanna see. Because whether you are actively participating or not, you are actively participating, creating that future. That's my challenge, and that's how I like to end it.

Create the future you wanna be a part of.

That's it for me, and hope you enjoyed it.

Please reach out if you wanna talk.

(audience applauds) (synthesised music)

This talk will explain why conversation will play such a large role in the future, define how it will happen, and suggest how you can integrate conversation into your product roadmap.

Why is conversation the next step in human/computer interaction?

If you think of a short history of HCI, we started from the true primitive – binary code. We moved along to command line code, which was better but still not mainstream-accessible. It was GUIs and point-and-click that really made computers accessible to the average person. Next came touch and gesture on mobiles and tablets.

The next step is conversation and voice. It’s the first time in computing history that the machine is learning our language, instead of the other way around. Of course that means we need to really understand language.

- Starting with a letter: a letter is a symbol that has a defined meaning, based on the collective agreement of a language community. Once a human learns a letter, we can recognise that pattern even if the typeface changes.

- Words: a group of letters that the majority of people in a language community agree how to pronounce.

- Sentences: combinations of words… this is where machines really struggle, because sentences have phrasing, context, slang, all kinds of nuance that computers struggle to understand.

There are 46.2 quadrillion ways to create a five-word sentence.

Personifying conversation is another challenge. Bots have personalities – even choosing for it to have ‘no personality’ is a choice and a form of personality. Humans will attribute personality to everything around us. It’s innate.

Defining voice is the first step of defining personality. You can use pitch, range, volume, speed in audio; in text and audio you have word choice, content choice, punctuation and grammar.

We can define personalities and know where people are from based on words they use – the choice of words between coke, pop and soda will tell you roughly where an American grew up. Women are more polite than men.

Character development comes next. Look to popular culture – radio and podcast hosts define a personality with nothing more than voice/sound. TV does it too.

Just as TV set cultural norms, conversational interfaces will play a huge role in the way children grow up. Kids are growing up to be more demanding. Could there be a link to using conversational interfaces?

Batman is a great character. Back story, quotes, voice… all highly recognisable.

Designing conversations…

- initiate

- listen

- respond

Initiating isn’t too hard at the moment – ‘hey siri’, ‘hey google’ – but it will get trickier, where the device will just be listening and you’ll speak to it in context.

One odd impact of voice is that character-based names don’t work. Brands which use tricky spelling don’t work when they’re read out, they just sound like ‘tumbler’ and ‘net flicks’.

Another problem is you may need a lot of information to do something like book a flight. People don’t speak the way the query needs to be constructed. In reality you have to go back and forward to clarify things, to get details and prompt for more information.

Responding has to be considered as well: quantity, relevance and clarity. Don’t give us a five minute monologue.

great catchcry from Joe Toscano at #wds18 ‘Create the future you want to see!’

— Philippa Costigan (@CosPip) November 1, 2018